2015-07-27 Mon

■ Trying out docker-machine a little [Docker]

By the way, I also went ahead and installed docker-machine.

Unlike boot2docker, which can only create a Docker host through a local VirtualBox, docker-machine can also create Docker hosts in the cloud, provided a driver supports the target platform.

Installation follows the official documentation:

Docker Machine

https://docs.docker.com/machine/

$ curl -L https://github.com/docker/machine/releases/download/v0.3.0/docker-machine_darwin-amd64 > /usr/local/bin/docker-machine

$ chmod +x /usr/local/bin/docker-machine

The version looks like this:

$ docker-machine -v

docker-machine version 0.3.0 (0a251fe)

To start with, just as with boot2docker, I'll create a Docker host in a VM on VirtualBox.

asteroid:~ kunitake$ docker-machine create --driver virtualbox dev

Creating CA: /Users/kunitake/.docker/machine/certs/ca.pem

Creating client certificate: /Users/kunitake/.docker/machine/certs/cert.pem

Image cache does not exist, creating it at /Users/kunitake/.docker/machine/cache...

No default boot2docker iso found locally, downloading the latest release...

Downloading https://github.com/boot2docker/boot2docker/releases/download/v1.7.1/boot2docker.iso to /Users/kunitake/.docker/machine/cache/boot2docker.iso...

Creating VirtualBox VM...

Creating SSH key...

Starting VirtualBox VM...

Starting VM...

To see how to connect Docker to this machine, run: docker-machine env dev

As the message says, running "docker-machine env dev" prints the environment variables that the docker client needs in order to connect to the docker daemon.
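The usual way to apply those variables is to eval the command's output in the current shell. Below is a minimal sketch, assuming a POSIX-compatible shell; the function name `use_machine` and the `DOCKER_MACHINE_BIN` override variable are hypothetical names introduced here for illustration, not part of docker-machine.

```shell
# Hypothetical helper: load the connection settings for one machine
# into the current shell session.
# DOCKER_MACHINE_BIN is an assumed override so the command can be
# swapped out (for example during a dry run); it defaults to docker-machine.
use_machine() {
    name="$1"
    if [ -z "$name" ]; then
        echo "usage: use_machine <machine-name>" >&2
        return 1
    fi
    # `docker-machine env NAME` prints `export VAR=value` lines;
    # eval applies them to this shell.
    eval "$("${DOCKER_MACHINE_BIN:-docker-machine}" env "$name")"
}

# Example (requires docker-machine and an existing machine named dev):
#   use_machine dev
#   docker ps
```

Wrapping the eval in a function mainly guards against forgetting the machine name, which docker-machine always requires.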

The Docker host appears to use the same image as boot2docker.

asteroid:~ kunitake$ docker-machine create --driver virtualbox hogehoge

Creating VirtualBox VM...

Creating SSH key...

Starting VirtualBox VM...

Starting VM...

(rest omitted)

Running this creates a VM named hogehoge, so separate, independent Docker hosts can be set up side by side.

With boot2docker, you could log in to the Docker host with

$ boot2docker ssh

docker-machine works in much the same way:

asteroid:~ kunitake$ docker-machine ssh hogehoge

(boot2docker ASCII-art banner)

Boot2Docker version 1.7.1, build master : c202798 - Wed Jul 15 00:16:02 UTC 2015

Docker version 1.7.1, build 786b29d

docker@hogehoge:~$

As shown, specifying the machine name logs you in to that particular Docker host.

Incidentally, because docker-machine gives every Docker host a name, omitting the name gets you scolded like this even when only one host exists.

asteroid:~ kunitake$ docker-machine ssh

Error: Please specify a machine name.

To list the Docker hosts under management:

$ docker-machine ls

NAME ACTIVE DRIVER STATE URL SWARM

dev * virtualbox Running tcp://192.168.99.100:2376

hogehoge virtualbox Running tcp://192.168.99.101:2376
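When there are many hosts, the listing can be filtered with standard tools. The following is a sketch only, assuming the column layout shown above; `running_machines` is a hypothetical helper name. Because the ACTIVE column can be empty, the position of STATE shifts between rows, so the sketch scans each row for the word "Running" instead of relying on a fixed field number.

```shell
# Print the names of machines whose STATE column reads "Running".
# Input: the text produced by `docker-machine ls`, on stdin.
running_machines() {
    awk 'NR > 1 {
        # Skip the header (NR == 1); scan the fields after NAME.
        for (i = 2; i <= NF; i++) {
            if ($i == "Running") { print $1; break }
        }
    }'
}

# Example (requires docker-machine):
#   docker-machine ls | running_machines
```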

To stop a host, is kill the right command?

asteroid:bin kunitake$ docker-machine kill hogehoge

asteroid:bin kunitake$ docker-machine ls

NAME ACTIVE DRIVER STATE URL SWARM

dev * virtualbox Running tcp://192.168.99.100:2376

hogehoge virtualbox Stopped

asteroid:bin kunitake$ docker-machine kill dev

asteroid:bin kunitake$ docker-machine ls

NAME ACTIVE DRIVER STATE URL SWARM

dev virtualbox Stopped

hogehoge virtualbox Stopped

Oh, there is a stop command in addition to kill, so stop would probably have been the better choice. To delete a host, use rm.

asteroid:bin kunitake$ docker-machine rm hogehoge

Successfully removed hogehoge

asteroid:bin kunitake$ docker-machine ls

NAME ACTIVE DRIVER STATE URL SWARM

dev virtualbox Stopped

A stopped Docker host can be brought back up with start.

asteroid:bin kunitake$ docker-machine start dev

Starting VM...

Too many retries. Last error: Maximum number of retries (60) exceeded

Huh? It won't come back up...

asteroid:bin kunitake$ docker-machine env dev

Unexpected error getting machine url: exit status 255

Hmm?

asteroid:bin kunitake$ docker-machine start dev

Starting VM...

Too many retries. Last error: Maximum number of retries (60) exceeded

asteroid:bin kunitake$ docker-machine upgrade dev

Error getting SSH command: exit status 255

asteroid:bin kunitake$ docker-machine stop dev

asteroid:bin kunitake$ docker-machine upgrade dev

Error: machine must be running to upgrade.

Hmm, I seem to be stuck.

Is this a bug, or something specific to my environment? If this part worked without trouble, docker-machine would be nicely versatile.

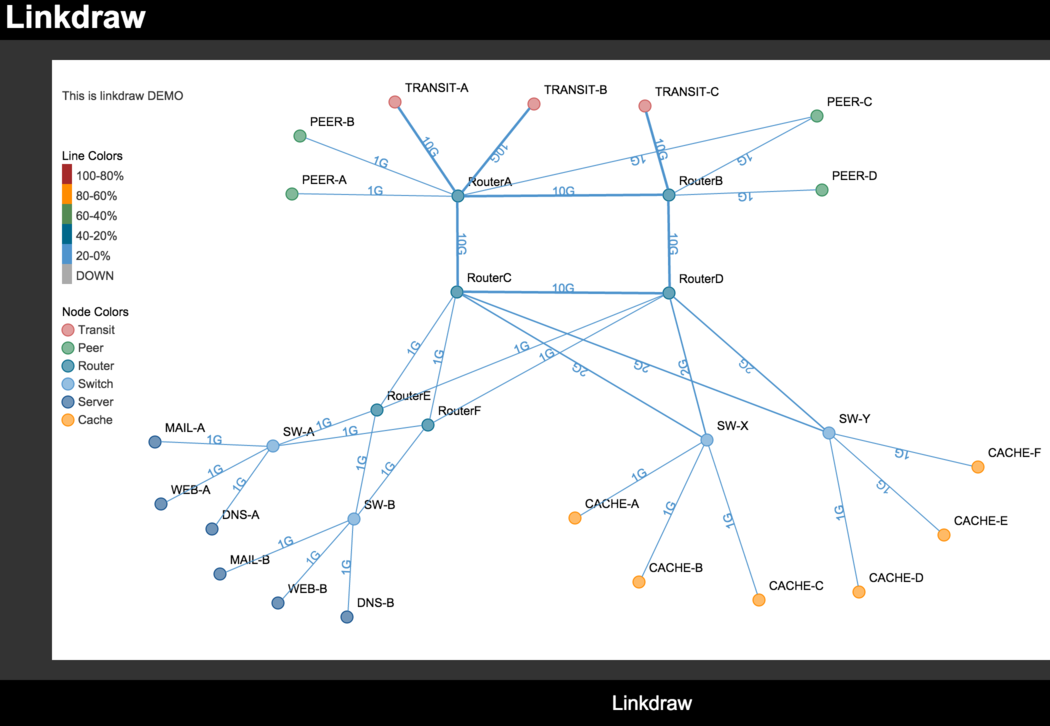

■ Trying out linkdraw [linkdraw]

I'd like to share in this joy too > "The one and only reason infrastructure engineers should try Unity" #engineer #iaas http://t.co/OCuBVbcytf via @SlideShare

kunitake (@kunitake), July 27, 2015

When I tweeted that, I received this reply:

@kunitake Let's use linkdraw. https://t.co/e777A4uyM8

Kouhei Maeda (@mkouhei), July 27, 2015

So I installed it locally to try it out. The installation instructions are at

https://github.com/mtoshi/linkdraw/wiki

and I followed them. It is basically a tool that gets installed on a web server, so I put it under /Library/WebServer/Documents/linkdraw.

$ cd ~/develop/github.com

$ git clone https://github.com/mtoshi/linkdraw.git

$ cd linkdraw

$ less utils/setup.sh

$ sudo mkdir /Library/WebServer/Documents/linkdraw

$ sudo bash utils/setup.sh /Library/WebServer/Documents/linkdraw

http://localhost/linkdraw/demo.html

Judging from the demo, it looks like it could replace cacti's Weathermap and give a clearer overview at the same time.

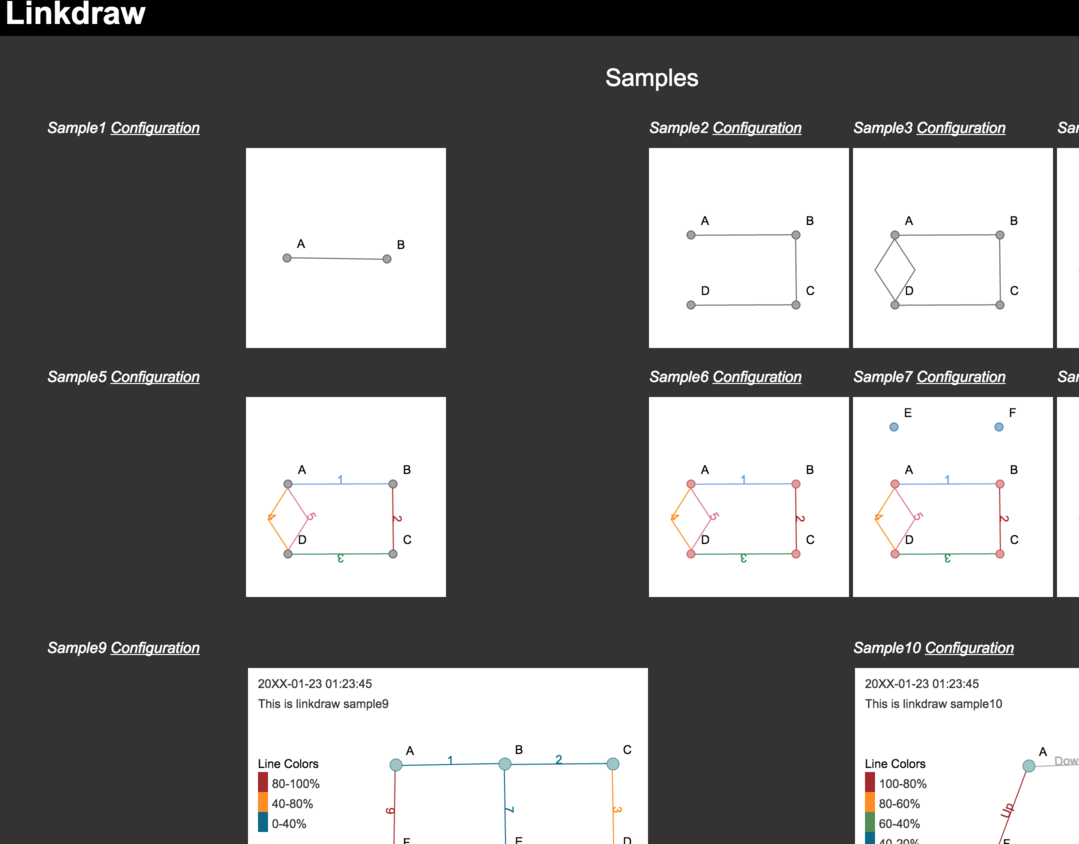

http://localhost/linkdraw/sample.html

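This sample doubles as a configuration tutorial. Rendering is done with SVG, and within the same directory

- configs/xxxx_config.json

is the configuration file, while

- positions/xxxx_position.json

appears to hold the position information.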

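In addition, he pointed me to the following:

@kunitake These are the slides from the Grand Unified Debian Meeting (大統一Debian勉強会). http://t.co/TYbZneUWaO Also, as a bonus, I played around with it here: http://t.co/Q63OWpN2VD

Kouhei Maeda (@mkouhei), July 27, 2015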

http://pgraph.palmtb.net/

I'd like a Ruby version (a gem, perhaps?) of this one too... though at the moment I have no love for Ruby.

■ A first look at what happens behind "Docker basic operations" [Docker]

- [2015-07-22-2] Docker basic operations

- [2015-07-23-2] Preparation for investigating what happens behind "Docker basic operations"

This entry continues from the two above.

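To keep things easy to follow, I first delete all docker containers and docker images.

The Docker host created by boot2docker uses aufs as the filesystem for containers. So once the docker images are deleted, everything under aufs is cleared out as well (below, operations on the docker host are shown with the docker@boot2docker prompt).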

docker@boot2docker:~$ sudo find /mnt/sda1/var/lib/docker/aufs/ -print

/mnt/sda1/var/lib/docker/aufs/

/mnt/sda1/var/lib/docker/aufs/diff

/mnt/sda1/var/lib/docker/aufs/layers

/mnt/sda1/var/lib/docker/aufs/mnt

Docker uses Control Groups (cgroups) to allocate resources, so let's take a look at that area as well.

docker@boot2docker:~$ find /sys/fs/cgroup/

/sys/fs/cgroup/

/sys/fs/cgroup/net_prio

/sys/fs/cgroup/net_prio/tasks

/sys/fs/cgroup/net_prio/net_prio.ifpriomap

/sys/fs/cgroup/net_prio/cgroup.procs

/sys/fs/cgroup/net_prio/release_agent

/sys/fs/cgroup/net_prio/net_prio.prioidx

/sys/fs/cgroup/net_prio/cgroup.clone_children

/sys/fs/cgroup/net_prio/cgroup.sane_behavior

/sys/fs/cgroup/net_prio/notify_on_release

/sys/fs/cgroup/perf_event

/sys/fs/cgroup/perf_event/tasks

/sys/fs/cgroup/perf_event/cgroup.procs

/sys/fs/cgroup/perf_event/release_agent

/sys/fs/cgroup/perf_event/docker

/sys/fs/cgroup/perf_event/docker/tasks

/sys/fs/cgroup/perf_event/docker/cgroup.procs

/sys/fs/cgroup/perf_event/docker/cgroup.clone_children

/sys/fs/cgroup/perf_event/docker/notify_on_release

/sys/fs/cgroup/perf_event/cgroup.clone_children

/sys/fs/cgroup/perf_event/cgroup.sane_behavior

/sys/fs/cgroup/perf_event/notify_on_release

/sys/fs/cgroup/net_cls

/sys/fs/cgroup/net_cls/tasks

/sys/fs/cgroup/net_cls/net_cls.classid

/sys/fs/cgroup/net_cls/cgroup.procs

/sys/fs/cgroup/net_cls/release_agent

/sys/fs/cgroup/net_cls/cgroup.clone_children

/sys/fs/cgroup/net_cls/cgroup.sane_behavior

/sys/fs/cgroup/net_cls/notify_on_release

/sys/fs/cgroup/freezer

/sys/fs/cgroup/freezer/tasks

/sys/fs/cgroup/freezer/cgroup.procs

/sys/fs/cgroup/freezer/release_agent

/sys/fs/cgroup/freezer/docker

/sys/fs/cgroup/freezer/docker/tasks

/sys/fs/cgroup/freezer/docker/cgroup.procs

/sys/fs/cgroup/freezer/docker/freezer.state

/sys/fs/cgroup/freezer/docker/cgroup.clone_children

/sys/fs/cgroup/freezer/docker/freezer.parent_freezing

/sys/fs/cgroup/freezer/docker/notify_on_release

/sys/fs/cgroup/freezer/docker/freezer.self_freezing

/sys/fs/cgroup/freezer/cgroup.clone_children

/sys/fs/cgroup/freezer/cgroup.sane_behavior

/sys/fs/cgroup/freezer/notify_on_release

/sys/fs/cgroup/devices

/sys/fs/cgroup/devices/tasks

/sys/fs/cgroup/devices/cgroup.procs

/sys/fs/cgroup/devices/devices.allow

/sys/fs/cgroup/devices/release_agent

/sys/fs/cgroup/devices/docker

/sys/fs/cgroup/devices/docker/tasks

/sys/fs/cgroup/devices/docker/cgroup.procs

/sys/fs/cgroup/devices/docker/devices.allow

/sys/fs/cgroup/devices/docker/cgroup.clone_children

/sys/fs/cgroup/devices/docker/notify_on_release

/sys/fs/cgroup/devices/docker/devices.deny

/sys/fs/cgroup/devices/docker/devices.list

/sys/fs/cgroup/devices/cgroup.clone_children

/sys/fs/cgroup/devices/cgroup.sane_behavior

/sys/fs/cgroup/devices/notify_on_release

/sys/fs/cgroup/devices/devices.deny

/sys/fs/cgroup/devices/devices.list

/sys/fs/cgroup/memory

/sys/fs/cgroup/memory/memory.pressure_level

/sys/fs/cgroup/memory/memory.kmem.max_usage_in_bytes

/sys/fs/cgroup/memory/memory.use_hierarchy

/sys/fs/cgroup/memory/memory.swappiness

/sys/fs/cgroup/memory/memory.memsw.failcnt

/sys/fs/cgroup/memory/tasks

/sys/fs/cgroup/memory/memory.limit_in_bytes

/sys/fs/cgroup/memory/memory.memsw.max_usage_in_bytes

/sys/fs/cgroup/memory/cgroup.procs

/sys/fs/cgroup/memory/release_agent

/sys/fs/cgroup/memory/memory.usage_in_bytes

/sys/fs/cgroup/memory/memory.memsw.limit_in_bytes

/sys/fs/cgroup/memory/docker

/sys/fs/cgroup/memory/docker/memory.pressure_level

/sys/fs/cgroup/memory/docker/memory.kmem.max_usage_in_bytes

/sys/fs/cgroup/memory/docker/memory.use_hierarchy

/sys/fs/cgroup/memory/docker/memory.swappiness

/sys/fs/cgroup/memory/docker/memory.memsw.failcnt

/sys/fs/cgroup/memory/docker/tasks

/sys/fs/cgroup/memory/docker/memory.limit_in_bytes

/sys/fs/cgroup/memory/docker/memory.memsw.max_usage_in_bytes

/sys/fs/cgroup/memory/docker/cgroup.procs

/sys/fs/cgroup/memory/docker/memory.usage_in_bytes

/sys/fs/cgroup/memory/docker/memory.memsw.limit_in_bytes

/sys/fs/cgroup/memory/docker/memory.failcnt

/sys/fs/cgroup/memory/docker/memory.kmem.limit_in_bytes

/sys/fs/cgroup/memory/docker/memory.force_empty

/sys/fs/cgroup/memory/docker/memory.kmem.slabinfo

/sys/fs/cgroup/memory/docker/memory.memsw.usage_in_bytes

/sys/fs/cgroup/memory/docker/memory.max_usage_in_bytes

/sys/fs/cgroup/memory/docker/cgroup.clone_children

/sys/fs/cgroup/memory/docker/memory.kmem.tcp.limit_in_bytes

/sys/fs/cgroup/memory/docker/memory.oom_control

/sys/fs/cgroup/memory/docker/memory.kmem.tcp.max_usage_in_bytes

/sys/fs/cgroup/memory/docker/memory.kmem.failcnt

/sys/fs/cgroup/memory/docker/notify_on_release

/sys/fs/cgroup/memory/docker/memory.kmem.usage_in_bytes

/sys/fs/cgroup/memory/docker/memory.stat

/sys/fs/cgroup/memory/docker/cgroup.event_control

/sys/fs/cgroup/memory/docker/memory.kmem.tcp.usage_in_bytes

/sys/fs/cgroup/memory/docker/memory.move_charge_at_immigrate

/sys/fs/cgroup/memory/docker/memory.soft_limit_in_bytes

/sys/fs/cgroup/memory/docker/memory.kmem.tcp.failcnt

/sys/fs/cgroup/memory/memory.failcnt

/sys/fs/cgroup/memory/memory.kmem.limit_in_bytes

/sys/fs/cgroup/memory/memory.force_empty

/sys/fs/cgroup/memory/memory.kmem.slabinfo

/sys/fs/cgroup/memory/memory.memsw.usage_in_bytes

/sys/fs/cgroup/memory/memory.max_usage_in_bytes

/sys/fs/cgroup/memory/cgroup.clone_children

/sys/fs/cgroup/memory/memory.kmem.tcp.limit_in_bytes

/sys/fs/cgroup/memory/memory.oom_control

/sys/fs/cgroup/memory/cgroup.sane_behavior

/sys/fs/cgroup/memory/memory.kmem.tcp.max_usage_in_bytes

/sys/fs/cgroup/memory/memory.kmem.failcnt

/sys/fs/cgroup/memory/notify_on_release

/sys/fs/cgroup/memory/memory.kmem.usage_in_bytes

/sys/fs/cgroup/memory/memory.stat

/sys/fs/cgroup/memory/cgroup.event_control

/sys/fs/cgroup/memory/memory.kmem.tcp.usage_in_bytes

/sys/fs/cgroup/memory/memory.move_charge_at_immigrate

/sys/fs/cgroup/memory/memory.soft_limit_in_bytes

/sys/fs/cgroup/memory/memory.kmem.tcp.failcnt

/sys/fs/cgroup/blkio

/sys/fs/cgroup/blkio/tasks

/sys/fs/cgroup/blkio/blkio.reset_stats

/sys/fs/cgroup/blkio/cgroup.procs

/sys/fs/cgroup/blkio/release_agent

/sys/fs/cgroup/blkio/docker

/sys/fs/cgroup/blkio/docker/tasks

/sys/fs/cgroup/blkio/docker/blkio.reset_stats

/sys/fs/cgroup/blkio/docker/cgroup.procs

/sys/fs/cgroup/blkio/docker/cgroup.clone_children

/sys/fs/cgroup/blkio/docker/notify_on_release

/sys/fs/cgroup/blkio/cgroup.clone_children

/sys/fs/cgroup/blkio/cgroup.sane_behavior

/sys/fs/cgroup/blkio/notify_on_release

/sys/fs/cgroup/cpuacct

/sys/fs/cgroup/cpuacct/cpuacct.usage_percpu

/sys/fs/cgroup/cpuacct/tasks

/sys/fs/cgroup/cpuacct/cgroup.procs

/sys/fs/cgroup/cpuacct/release_agent

/sys/fs/cgroup/cpuacct/docker

/sys/fs/cgroup/cpuacct/docker/cpuacct.usage_percpu

/sys/fs/cgroup/cpuacct/docker/tasks

/sys/fs/cgroup/cpuacct/docker/cgroup.procs

/sys/fs/cgroup/cpuacct/docker/cpuacct.stat

/sys/fs/cgroup/cpuacct/docker/cgroup.clone_children

/sys/fs/cgroup/cpuacct/docker/notify_on_release

/sys/fs/cgroup/cpuacct/docker/cpuacct.usage

/sys/fs/cgroup/cpuacct/cpuacct.stat

/sys/fs/cgroup/cpuacct/cgroup.clone_children

/sys/fs/cgroup/cpuacct/cgroup.sane_behavior

/sys/fs/cgroup/cpuacct/notify_on_release

/sys/fs/cgroup/cpuacct/cpuacct.usage

/sys/fs/cgroup/cpu

/sys/fs/cgroup/cpu/tasks

/sys/fs/cgroup/cpu/cgroup.procs

/sys/fs/cgroup/cpu/cpu.rt_runtime_us

/sys/fs/cgroup/cpu/cpu.shares

/sys/fs/cgroup/cpu/release_agent

/sys/fs/cgroup/cpu/docker

/sys/fs/cgroup/cpu/docker/tasks

/sys/fs/cgroup/cpu/docker/cgroup.procs

/sys/fs/cgroup/cpu/docker/cpu.rt_runtime_us

/sys/fs/cgroup/cpu/docker/cpu.shares

/sys/fs/cgroup/cpu/docker/cpu.cfs_quota_us

/sys/fs/cgroup/cpu/docker/cpu.stat

/sys/fs/cgroup/cpu/docker/cgroup.clone_children

/sys/fs/cgroup/cpu/docker/cpu.cfs_period_us

/sys/fs/cgroup/cpu/docker/cpu.rt_period_us

/sys/fs/cgroup/cpu/docker/notify_on_release

/sys/fs/cgroup/cpu/cpu.cfs_quota_us

/sys/fs/cgroup/cpu/cpu.stat

/sys/fs/cgroup/cpu/cgroup.clone_children

/sys/fs/cgroup/cpu/cpu.cfs_period_us

/sys/fs/cgroup/cpu/cgroup.sane_behavior

/sys/fs/cgroup/cpu/cpu.rt_period_us

/sys/fs/cgroup/cpu/notify_on_release

/sys/fs/cgroup/cpuset

/sys/fs/cgroup/cpuset/cpuset.mem_hardwall

/sys/fs/cgroup/cpuset/tasks

/sys/fs/cgroup/cpuset/cgroup.procs

/sys/fs/cgroup/cpuset/release_agent

/sys/fs/cgroup/cpuset/cpuset.cpus

/sys/fs/cgroup/cpuset/cpuset.mems

/sys/fs/cgroup/cpuset/cpuset.sched_relax_domain_level

/sys/fs/cgroup/cpuset/docker

/sys/fs/cgroup/cpuset/docker/cpuset.mem_hardwall

/sys/fs/cgroup/cpuset/docker/tasks

/sys/fs/cgroup/cpuset/docker/cgroup.procs

/sys/fs/cgroup/cpuset/docker/cpuset.cpus

/sys/fs/cgroup/cpuset/docker/cpuset.mems

/sys/fs/cgroup/cpuset/docker/cpuset.sched_relax_domain_level

/sys/fs/cgroup/cpuset/docker/cpuset.memory_pressure

/sys/fs/cgroup/cpuset/docker/cpuset.memory_spread_page

/sys/fs/cgroup/cpuset/docker/cpuset.memory_spread_slab

/sys/fs/cgroup/cpuset/docker/cpuset.cpu_exclusive

/sys/fs/cgroup/cpuset/docker/cpuset.mem_exclusive

/sys/fs/cgroup/cpuset/docker/cpuset.effective_cpus

/sys/fs/cgroup/cpuset/docker/cpuset.effective_mems

/sys/fs/cgroup/cpuset/docker/cgroup.clone_children

/sys/fs/cgroup/cpuset/docker/cpuset.sched_load_balance

/sys/fs/cgroup/cpuset/docker/notify_on_release

/sys/fs/cgroup/cpuset/docker/cpuset.memory_migrate

/sys/fs/cgroup/cpuset/cpuset.memory_pressure

/sys/fs/cgroup/cpuset/cpuset.memory_spread_page

/sys/fs/cgroup/cpuset/cpuset.memory_spread_slab

/sys/fs/cgroup/cpuset/cpuset.cpu_exclusive

/sys/fs/cgroup/cpuset/cpuset.mem_exclusive

/sys/fs/cgroup/cpuset/cpuset.effective_cpus

/sys/fs/cgroup/cpuset/cpuset.effective_mems

/sys/fs/cgroup/cpuset/cgroup.clone_children

/sys/fs/cgroup/cpuset/cpuset.sched_load_balance

/sys/fs/cgroup/cpuset/cgroup.sane_behavior

/sys/fs/cgroup/cpuset/notify_on_release

/sys/fs/cgroup/cpuset/cpuset.memory_pressure_enabled

/sys/fs/cgroup/cpuset/cpuset.memory_migrate

The subsystems in which a docker group is defined from the start are:

- perf_event

- freezer

- devices

- memory

- blkio

- cpuacct

- cpu

- cpuset
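The list above can be derived mechanically instead of by reading the long find output. Here is a small sketch, assuming the cgroup v1 layout shown in this entry (one directory per subsystem under /sys/fs/cgroup); `docker_subsystems` is a hypothetical function name.

```shell
# List cgroup (v1) subsystems that contain a "docker" child group.
# $1: cgroup mount root (assumed default: /sys/fs/cgroup).
docker_subsystems() {
    root="${1:-/sys/fs/cgroup}"
    for dir in "$root"/*/; do
        # Keep only subsystem directories that have a docker/ child.
        if [ -d "${dir}docker" ]; then
            basename "$dir"
        fi
    done
}

# Example (on the Docker host):
#   docker_subsystems
```

Subsystems such as net_prio and net_cls have no docker directory in the listing above, so they would not be printed.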

Let's look at cpuset.

$ cat /proc/cpuinfo | grep ^processor |wc -l

8

The machine in use has 8 cores.

docker@boot2docker:~$ cd /sys/fs/cgroup/cpuset/docker

docker@boot2docker:/sys/fs/cgroup/cpuset/docker$ cat cpuset.cpus

0-7

docker@boot2docker:/sys/fs/cgroup/cpuset/docker$ cat cpuset.cpu_exclusive

0

The docker group is assigned all 8 CPU cores. Since cpuset.cpu_exclusive is 0, the group does not hold these cores exclusively; other groups can use them too.
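The cpuset.cpus value is a list of CPU numbers and ranges, so "0-7" stands for 8 CPUs. As an illustration, the sketch below counts the CPUs in such a list; `count_cpus` is a hypothetical helper, and the comma-separated "number or first-last" form is the only input it assumes.

```shell
# Count the CPUs described by a cpuset list such as "0-7" or "0-3,8".
# The function body runs in a subshell so the IFS change stays local.
count_cpus() (
    IFS=','
    total=0
    for part in $1; do
        case "$part" in
            *-*)
                # A range "first-last" contributes last - first + 1 CPUs.
                first="${part%-*}"
                last="${part#*-}"
                total=$((total + last - first + 1))
                ;;
            *)
                # A single CPU number contributes one CPU.
                total=$((total + 1))
                ;;
        esac
    done
    echo "$total"
)

# Example (on the Docker host):
#   count_cpus "$(cat /sys/fs/cgroup/cpuset/docker/cpuset.cpus)"
```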

Let's look at cpu.

docker@boot2docker:~$ cd /sys/fs/cgroup/cpu/docker

docker@boot2docker:/sys/fs/cgroup/cpu/docker$ cat cpu.shares

1024

docker@boot2docker:/sys/fs/cgroup/cpu/docker$ cat cpu.cfs_period_us

100000

docker@boot2docker:/sys/fs/cgroup/cpu/docker$ cat cpu.cfs_quota_us

-1

cpu.shares is still at its default. cpu.cfs_period_us is 100000 microseconds, i.e. 0.1 seconds. But cpu.cfs_quota_us is -1, so no CPU time limit seems to be applied.
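The two CFS values are meant to be read together: the quota is the CPU time a group may use within each period, and a negative quota means no cap. A minimal sketch of that arithmetic follows; `cfs_limit` is a hypothetical helper and uses integer percentages only.

```shell
# Translate CFS bandwidth settings into a human-readable CPU limit.
# $1: value of cpu.cfs_quota_us, $2: value of cpu.cfs_period_us
cfs_limit() {
    quota="$1"
    period="$2"
    if [ "$quota" -lt 0 ]; then
        # A negative quota (-1) means no bandwidth limit is applied.
        echo "unlimited"
    else
        # quota/period is the share of one CPU; values above 100%
        # mean more than one CPU's worth of time per period.
        echo "$((quota * 100 / period))%"
    fi
}

# Example with the values read above:
#   cfs_limit -1 100000
```

With a quota of 50000 and the same period, the group would be capped at half of one CPU.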

Next, memory:

docker@boot2docker:~$ cd /sys/fs/cgroup/memory/docker

docker@boot2docker:/sys/fs/cgroup/memory/docker$ free

total used free shared buff/cache available

Mem: 2049924 29976 1831564 109012 188384 1808108

Swap: 1461664 0 1461664

$ cat memory.limit_in_bytes

9223372036854771712

docker@boot2docker:/sys/fs/cgroup/memory/docker$ cat memory.memsw.limit_in_bytes

9223372036854771712

No particular limit seems to be in place.
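The value 9223372036854771712 equals 2^63 minus 4096, far more than the roughly 2 GB of RAM that free reports, which is why it reads as "no limit". One hedged way to make that check explicit is to compare the limit with physical memory; `memory_limit_status` below is a hypothetical helper, and comparing against total RAM is a choice made for this sketch, not a rule taken from Docker.

```shell
# Decide whether a memory cgroup limit is effectively "no limit".
# $1: value of memory.limit_in_bytes
# $2: total physical memory in kB (e.g. the "total" column of free)
memory_limit_status() {
    limit="$1"
    total_bytes=$(( $2 * 1024 ))
    if [ "$limit" -gt "$total_bytes" ]; then
        # A limit above physical RAM cannot constrain the group.
        echo "effectively unlimited"
    else
        echo "limited to $limit bytes"
    fi
}

# Example with the values shown above:
#   memory_limit_status 9223372036854771712 2049924
```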

With things in this state, I pull the hello-world Docker image from the Docker client.

asteroid:~ kunitake$ docker pull hello-world:latest

latest: Pulling from hello-world

a8219747be10: Pull complete

91c95931e552: Already exists

hello-world:latest: The image you are pulling has been verified. Important: image verification is a tech preview feature and should not be relied on to provide security.

Digest: sha256:aa03e5d0d5553b4c3473e89c8619cf79df368babd18681cf5daeb82aab55838d

Status: Downloaded newer image for hello-world:latest

asteroid:~ kunitake$ docker images

REPOSITORY TAG IMAGE ID CREATED VIRTUAL SIZE

hello-world latest 91c95931e552 3 months ago 910 B

Huh? One layer says "Already exists". I thought I had deleted everything; was something still cached somewhere?

~# find /mnt/sda1/var/lib/docker/aufs -type f -print |xargs ls -l

-rwxr-xr-x 1 root root 910 Jul 9 2014 /mnt/sda1/var/lib/docker/aufs/diff/a8219747be10611d65b7c693f48e7222c0bf54b5df8467d3f99003611afa1fd8/hello

-rw-r--r-- 1 root root 65 Jul 27 03:18 /mnt/sda1/var/lib/docker/aufs/layers/91c95931e552b11604fea91c2f537284149ec32fff0f700a4769cfd31d7696ae

-rw-r--r-- 1 root root 0 Jul 27 03:18 /mnt/sda1/var/lib/docker/aufs/layers/a8219747be10611d65b7c693f48e7222c0bf54b5df8467d3f99003611afa1fd8

# cat /mnt/sda1/var/lib/docker/aufs/layers/91c95931e552b11604fea91c2f537284149ec32fff0f700a4769cfd31d7696ae

a8219747be10611d65b7c693f48e7222c0bf54b5df8467d3f99003611afa1fd8

Where do Docker containers and aufs get tied together, I wonder?

Now, let's try docker run.

$ docker run hello-world:latest

Hello from Docker.

This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:

1. The Docker client contacted the Docker daemon.

2. The Docker daemon pulled the "hello-world" image from the Docker Hub.

(Assuming it was not already locally available.)

3. The Docker daemon created a new container from that image which runs the

executable that produces the output you are currently reading.

4. The Docker daemon streamed that output to the Docker client, which sent it

to your terminal.

To try something more ambitious, you can run an Ubuntu container with:

$ docker run -it ubuntu bash

For more examples and ideas, visit:

http://docs.docker.com/userguide/

Right after the run, nothing appears to have changed on the cgroup side, but the aufs side did change.

root@boot2docker:~# find /mnt/sda1/var/lib/docker/aufs -type f -print |xargs ls -l

-rwxr-xr-x 1 root root 910 Jul 9 2014 /mnt/sda1/var/lib/docker/aufs/diff/a8219747be10611d65b7c693f48e7222c0bf54b5df8467d3f99003611afa1fd8/hello

-rwxr-xr-x 1 root root 0 Jul 27 03:26 /mnt/sda1/var/lib/docker/aufs/diff/c17d1149bc19ae3a3e044c79e103775882106efb1139d35eb0c7f3d0cbfd1792-init/.dockerenv

-rwxr-xr-x 1 root root 0 Jul 27 03:26 /mnt/sda1/var/lib/docker/aufs/diff/c17d1149bc19ae3a3e044c79e103775882106efb1139d35eb0c7f3d0cbfd1792-init/.dockerinit

-r--r--r-- 1 root root 0 Jul 27 03:26 /mnt/sda1/var/lib/docker/aufs/diff/c17d1149bc19ae3a3e044c79e103775882106efb1139d35eb0c7f3d0cbfd1792-init/.wh..wh.aufs

-rwxr-xr-x 1 root root 0 Jul 27 03:26 /mnt/sda1/var/lib/docker/aufs/diff/c17d1149bc19ae3a3e044c79e103775882106efb1139d35eb0c7f3d0cbfd1792-init/dev/console

-rwxr-xr-x 1 root root 0 Jul 27 03:26 /mnt/sda1/var/lib/docker/aufs/diff/c17d1149bc19ae3a3e044c79e103775882106efb1139d35eb0c7f3d0cbfd1792-init/etc/hostname

-rwxr-xr-x 1 root root 0 Jul 27 03:26 /mnt/sda1/var/lib/docker/aufs/diff/c17d1149bc19ae3a3e044c79e103775882106efb1139d35eb0c7f3d0cbfd1792-init/etc/hosts

-rwxr-xr-x 1 root root 0 Jul 27 03:26 /mnt/sda1/var/lib/docker/aufs/diff/c17d1149bc19ae3a3e044c79e103775882106efb1139d35eb0c7f3d0cbfd1792-init/etc/resolv.conf

-r--r--r-- 1 root root 0 Jul 27 03:26 /mnt/sda1/var/lib/docker/aufs/diff/c17d1149bc19ae3a3e044c79e103775882106efb1139d35eb0c7f3d0cbfd1792/.wh..wh.aufs

-rw-r--r-- 1 root root 65 Jul 27 03:18 /mnt/sda1/var/lib/docker/aufs/layers/91c95931e552b11604fea91c2f537284149ec32fff0f700a4769cfd31d7696ae

-rw-r--r-- 1 root root 0 Jul 27 03:18 /mnt/sda1/var/lib/docker/aufs/layers/a8219747be10611d65b7c693f48e7222c0bf54b5df8467d3f99003611afa1fd8

-rw-r--r-- 1 root root 200 Jul 27 03:26 /mnt/sda1/var/lib/docker/aufs/layers/c17d1149bc19ae3a3e044c79e103775882106efb1139d35eb0c7f3d0cbfd1792

-rw-r--r-- 1 root root 130 Jul 27 03:26 /mnt/sda1/var/lib/docker/aufs/layers/c17d1149bc19ae3a3e044c79e103775882106efb1139d35eb0c7f3d0cbfd1792-init
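The file sizes under layers/ hint at how the pieces link up. An ID is 64 characters, so 65 bytes is one ID plus a newline, 130 bytes is two such lines, and 200 bytes matches the "-init" ID (70 bytes with newline) plus two plain IDs. That is consistent with each layers file listing its ancestor layers one per line, though this is an inference from the listing, not something confirmed in the documentation here. Under that assumption, the chain for a layer or container could be printed with a sketch like the following; `layer_chain` is a hypothetical name.

```shell
# Print an aufs layer's ancestry, starting with the layer itself.
# $1: layer or container ID
# $2: aufs root (assumed default: /mnt/sda1/var/lib/docker/aufs)
layer_chain() {
    id="$1"
    aufs_root="${2:-/mnt/sda1/var/lib/docker/aufs}"
    file="$aufs_root/layers/$id"
    if [ ! -f "$file" ]; then
        echo "no layers file for $id" >&2
        return 1
    fi
    echo "$id"
    # Assumption: the file holds the ancestor IDs, one per line.
    cat "$file"
}

# Example (as root on the Docker host):
#   layer_chain c17d1149bc19ae3a3e044c79e103775882106efb1139d35eb0c7f3d0cbfd1792
```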

Whether cgroups are set up per container probably can't be seen unless the container keeps running in the background, so I'll try "docker run -d nginx:latest".

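After doing so, a subgroup named after the Docker container ID had been created under the docker group of each subsystem. The same kind of subgroup may well have existed for a moment during docker run hello-world too.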
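Given that layout, a single setting for one container can be read straight from its subgroup. The sketch below assumes the path pattern <root>/<subsystem>/docker/<container ID>/<file> seen in this entry; `container_cgroup_value` is a hypothetical helper name.

```shell
# Read one cgroup setting for a container.
# $1: full container ID, $2: subsystem (e.g. memory),
# $3: file name (e.g. memory.limit_in_bytes),
# $4: cgroup root (assumed default: /sys/fs/cgroup)
container_cgroup_value() {
    id="$1"
    subsystem="$2"
    file="$3"
    root="${4:-/sys/fs/cgroup}"
    path="$root/$subsystem/docker/$id/$file"
    if [ ! -r "$path" ]; then
        echo "cannot read $path" >&2
        return 1
    fi
    cat "$path"
}

# Example (on the Docker host, with the nginx container running):
#   container_cgroup_value 69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4 cpu cpu.shares
```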

root@boot2docker:~# find /sys/fs/cgroup/ -print |grep 69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4

/sys/fs/cgroup/perf_event/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4

/sys/fs/cgroup/perf_event/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/tasks

/sys/fs/cgroup/perf_event/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cgroup.procs

/sys/fs/cgroup/perf_event/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cgroup.clone_children

/sys/fs/cgroup/perf_event/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/notify_on_release

/sys/fs/cgroup/freezer/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4

/sys/fs/cgroup/freezer/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/tasks

/sys/fs/cgroup/freezer/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cgroup.procs

/sys/fs/cgroup/freezer/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/freezer.state

/sys/fs/cgroup/freezer/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cgroup.clone_children

/sys/fs/cgroup/freezer/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/freezer.parent_freezing

/sys/fs/cgroup/freezer/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/notify_on_release

/sys/fs/cgroup/freezer/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/freezer.self_freezing

/sys/fs/cgroup/devices/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4

/sys/fs/cgroup/devices/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/tasks

/sys/fs/cgroup/devices/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cgroup.procs

/sys/fs/cgroup/devices/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/devices.allow

/sys/fs/cgroup/devices/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cgroup.clone_children

/sys/fs/cgroup/devices/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/notify_on_release

/sys/fs/cgroup/devices/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/devices.deny

/sys/fs/cgroup/devices/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/devices.list

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/memory.pressure_level

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/memory.kmem.max_usage_in_bytes

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/memory.use_hierarchy

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/memory.swappiness

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/memory.memsw.failcnt

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/tasks

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/memory.limit_in_bytes

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/memory.memsw.max_usage_in_bytes

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cgroup.procs

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/memory.usage_in_bytes

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/memory.memsw.limit_in_bytes

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/memory.failcnt

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/memory.kmem.limit_in_bytes

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/memory.force_empty

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/memory.kmem.slabinfo

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/memory.memsw.usage_in_bytes

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/memory.max_usage_in_bytes

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cgroup.clone_children

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/memory.kmem.tcp.limit_in_bytes

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/memory.oom_control

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/memory.kmem.tcp.max_usage_in_bytes

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/memory.kmem.failcnt

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/notify_on_release

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/memory.kmem.usage_in_bytes

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/memory.stat

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cgroup.event_control

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/memory.kmem.tcp.usage_in_bytes

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/memory.move_charge_at_immigrate

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/memory.soft_limit_in_bytes

/sys/fs/cgroup/memory/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/memory.kmem.tcp.failcnt

/sys/fs/cgroup/blkio/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4

/sys/fs/cgroup/blkio/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/tasks

/sys/fs/cgroup/blkio/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/blkio.reset_stats

/sys/fs/cgroup/blkio/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cgroup.procs

/sys/fs/cgroup/blkio/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cgroup.clone_children

/sys/fs/cgroup/blkio/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/notify_on_release

/sys/fs/cgroup/cpuacct/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4

/sys/fs/cgroup/cpuacct/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cpuacct.usage_percpu

/sys/fs/cgroup/cpuacct/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/tasks

/sys/fs/cgroup/cpuacct/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cgroup.procs

/sys/fs/cgroup/cpuacct/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cpuacct.stat

/sys/fs/cgroup/cpuacct/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cgroup.clone_children

/sys/fs/cgroup/cpuacct/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/notify_on_release

/sys/fs/cgroup/cpuacct/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cpuacct.usage

/sys/fs/cgroup/cpu/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4

/sys/fs/cgroup/cpu/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/tasks

/sys/fs/cgroup/cpu/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cgroup.procs

/sys/fs/cgroup/cpu/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cpu.rt_runtime_us

/sys/fs/cgroup/cpu/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cpu.shares

/sys/fs/cgroup/cpu/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cpu.cfs_quota_us

/sys/fs/cgroup/cpu/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cpu.stat

/sys/fs/cgroup/cpu/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cgroup.clone_children

/sys/fs/cgroup/cpu/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cpu.cfs_period_us

/sys/fs/cgroup/cpu/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cpu.rt_period_us

/sys/fs/cgroup/cpu/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/notify_on_release

/sys/fs/cgroup/cpuset/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4

/sys/fs/cgroup/cpuset/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cpuset.mem_hardwall

/sys/fs/cgroup/cpuset/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/tasks

/sys/fs/cgroup/cpuset/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cgroup.procs

/sys/fs/cgroup/cpuset/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cpuset.cpus

/sys/fs/cgroup/cpuset/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cpuset.mems

/sys/fs/cgroup/cpuset/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cpuset.sched_relax_domain_level

/sys/fs/cgroup/cpuset/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cpuset.memory_pressure

/sys/fs/cgroup/cpuset/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cpuset.memory_spread_page

/sys/fs/cgroup/cpuset/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cpuset.memory_spread_slab

/sys/fs/cgroup/cpuset/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cpuset.cpu_exclusive

/sys/fs/cgroup/cpuset/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cpuset.mem_exclusive

/sys/fs/cgroup/cpuset/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cpuset.effective_cpus

/sys/fs/cgroup/cpuset/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cpuset.effective_mems

/sys/fs/cgroup/cpuset/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cgroup.clone_children

/sys/fs/cgroup/cpuset/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cpuset.sched_load_balance

/sys/fs/cgroup/cpuset/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/notify_on_release

/sys/fs/cgroup/cpuset/docker/69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4/cpuset.memory_migrate

While I'm at it, here is the process information as seen from inside the Docker container:

asteroid:~ kunitake$ docker exec -it 69bd35d3768f2a6868a3cb7621c8586926b55cc7c8baf428b1f5ed5de21f41d4 /bin/bash

root@69bd35d3768f:/# ps aux

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

root 1 0.0 0.2 31500 5144 ? Ss 03:30 0:00 nginx: master process nginx -g daemon off;

nginx 6 0.0 0.1 31876 2788 ? S 03:30 0:00 nginx: worker process

root 7 2.5 0.1 20212 3156 ? Ss 03:37 0:00 /bin/bash

root 11 0.0 0.1 17492 2176 ? R+ 03:37 0:00 ps aux

And here is the nginx process information as seen from the Docker host:

root@boot2docker:~# ps aux |grep [n]ginx

root 7168 0.0 0.2 31500 5144 ? Ss 03:30 0:00 nginx: master process nginx -g daemon off;

104 7174 0.0 0.1 31876 2788 ? S 03:30 0:00 nginx: worker process

Does Docker keep management information for this PID mapping somewhere as well?
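One place where the link can be observed from the host side is /proc/<pid>/cgroup, which names the cgroup each process belongs to; with the layout seen earlier, the entries for a container process should end in /docker/<container ID>. In the other direction, `docker inspect --format '{{.State.Pid}}' <container>` should report the host PID of the container's PID 1. The sketch below extracts the container ID from the /proc file's contents; `container_id_from_cgroup` is a hypothetical helper, and the "N:subsystem:/docker/<64 hex characters>" line format is an assumption based on the cgroup paths above.

```shell
# Extract the container ID from the contents of /proc/<pid>/cgroup.
# Reads lines such as "4:memory:/docker/<64-hex-id>" on stdin and
# prints the first ID found; prints nothing for other processes.
container_id_from_cgroup() {
    sed -n 's#^[0-9]*:[^:]*:/docker/\([0-9a-f]\{64\}\)$#\1#p' | head -n 1
}

# Example (on the Docker host, using the nginx master PID shown above):
#   container_id_from_cgroup < /proc/7168/cgroup
```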

That gives me a rough feel for how things fit together, so next I'll read through the documentation properly.
